3 Methods by Downloading Large Amounts of Data From Mongo to ASP.NET Core Web API

There was a need to download a lot of data to the client from the MongoDB database. The data is a JSON, with information about the machine, obtained from the GPS tracker. This data is sent at 0.5-second intervals. For a day for one machine, we get about 172 000 records.

The server code is written in ASP.NET CORE 2.0 using the standard MongoDB.Driver 2.4.4 driver. During the testing of the service, a significant consumption of memory was detected by the Web Api application process – about 700 MB, when executing one query.

When executing multiple requests in parallel, the process memory size can be more than 1 GB. Since it is supposed to use the service in a container on the cheapest device with a RAM of 0.7 GB, a large consumption of RAM led to the need to optimize the data unloading process.

- Thus, the basic implementation of the method involves downloading all the data and sending it to the client. This implementation is presented in the listing below.

Method 1 (all data is sent simultaneously)

// Get states by date range

// GET state/startDate/endDate

[HttpGet("{vin}/{startTimestamp}/{endTimestamp}")]

public async Task<StatesViewModel> Get(string vin, DateTime startTimestamp,

DateTime endTimestamp)

{

// Filter

var builder = Builders<Machine>.Filter;

// Set of filters

var filters = new List<FilterDefinition<Machine>>

{

builder.Where(x => x.Vin == vin),

builder.Where(x => x.Timestamp >= startTimestamp

&& x.Timestamp <= endTimestamp)

};

// Combining filters

var filterConcat = builder.And(filters);

using (var cursor = await database

.GetCollection<Machine>(_mongoConfig.CollectionName)

.FindAsync(filterConcat).ConfigureAwait(false))

{

var a = await cursor.ToListAsync().ConfigureAwait(false);

return _mapper.Map<IEnumerable<Machine>, StatesViewModel>(a);

}

}

As an alternative, the method of using queries with the specification of the number of the initial line and the number of lines to be uploaded, which is shown below, was used. In this case, the unloading is performed in the Response flow to reduce the consumption of RAM.

Method 2 (using subqueries and writing to the Response stream)

// Get states by date range

// GET state/startDate/endDate

[HttpGet("GetListQuaries/{vin}/{startTimestamp}/{endTimestamp}")]

public async Task<ActionResult> GetListQuaries(string vin, DateTime startTimestamp,

DateTime endTimestamp)

{

Response.ContentType = "application/json";

await Response.WriteAsync("[").ConfigureAwait(false); ;

// Filter

var builder = Builders<Machine>.Filter;

// Set of filters

var filters = new List<FilterDefinition<Machine>>

{

builder.Where(x => x.Vin == vin),

builder.Where(x => x.Timestamp >= startTimestamp

&& x.Timestamp <= endTimestamp)

};

// Combining filters

var filterConcat = builder.And(filters);

int batchSize = 15000;

int total = 0;

long count =await database.GetCollection<Machine>

(_mongoConfig.CollectionName)

.CountAsync((filterConcat));

while (total < count)

{

using (var cursor = await database

.GetCollection<Machine>(_mongoConfig.CollectionName)

.FindAsync(filterConcat, new FindOptions<Machine, Machine>()

{Skip = total, Limit = batchSize})

.ConfigureAwait(false))

{

// Move to the next batch of docs

while (cursor.MoveNext())

{

var batch = cursor.Current;

foreach (var doc in batch)

{

await Response.WriteAsync(JsonConvert.SerializeObject(doc))

.ConfigureAwait(false);

}

}

}

total += batchSize;

}

await Response.WriteAsync("]").ConfigureAwait(false); ;

return new EmptyResult();

}

Also, the option of setting the BatchSize parameter in the cursor was applied, the data was also written to the Response stream.

Method 3 (use the BatchSize parameter and write to the Response stream)

// Get states by date range

// GET state/startDate/endDate

[HttpGet("GetList/{vin}/{startTimestamp}/{endTimestamp}")]

public async Task<ActionResult> GetList(string vin, DateTime startTimestamp, DateTime endTimestamp)

{

Response.ContentType = "application/json";

// Filter

var builder = Builders<Machine>.Filter;

// Set of Filter

var filters = new List<FilterDefinition<Machine>>

{

builder.Where(x => x.Vin == vin),

builder.Where(x => x.Timestamp >= startTimestamp

&& x.Timestamp <= endTimestamp)

};

// Combining filters

var filterConcat = builder.And(filters);

await Response.WriteAsync("[").ConfigureAwait(false); ;

using (var cursor = await database

.GetCollection<Machine> (_mongoConfig.CollectionName)

.FindAsync(filterConcat, new FindOptions<Machine, Machine>

{ BatchSize = 15000 })

.ConfigureAwait(false))

{

// Move to the next batch of docs

while (await cursor.MoveNextAsync().ConfigureAwait(false))

{

var batch = cursor.Current;

foreach (var doc in batch)

{

await Response.WriteAsync(JsonConvert.SerializeObject(doc))

.ConfigureAwait(false);

}

}

}

await Response.WriteAsync("]").ConfigureAwait(false);

return new EmptyResult();

}

One entry in the database has the following structure:

{"Id":"5a108e0cf389230001fe52f1",

"Vin":"357973047728404",

"Timestamp":"2017-12-05T12:46:16Z",

"Name":null,

"FuelRemaining":null,

"EngineSpeed":null,

"Speed":0,

"Direction":340.0,

"FuelConsumption":null,

"Location":{"Longitude":37.27543,"Latitude":50.11379}}

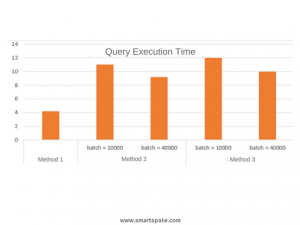

- Performance testing was performed on a request using HttpClient.

- We consider interesting not absolute values, but their order.

The results of performance testing for the three implementation options are summarized in the table below.